Biometric categorisation refers to the use of AI systems to assign individuals to categories, such as age, gender, or ethnicity, on the basis of their biometric data. Under the EU AI Act, such systems are treated as high-risk, and in some cases prohibited outright, because they can perpetuate discrimination, stereotyping, and rights violations. Strict legal controls and transparency requirements apply, especially in public or sensitive environments.

1. Background and Establishment

Biometric categorisation systems use artificial intelligence to infer or label attributes such as race, sex, age, emotion, or cultural background based on biometric data (e.g., facial scans, iris patterns, gait analysis). These systems are deployed across domains—from advertising to access control and surveillance.

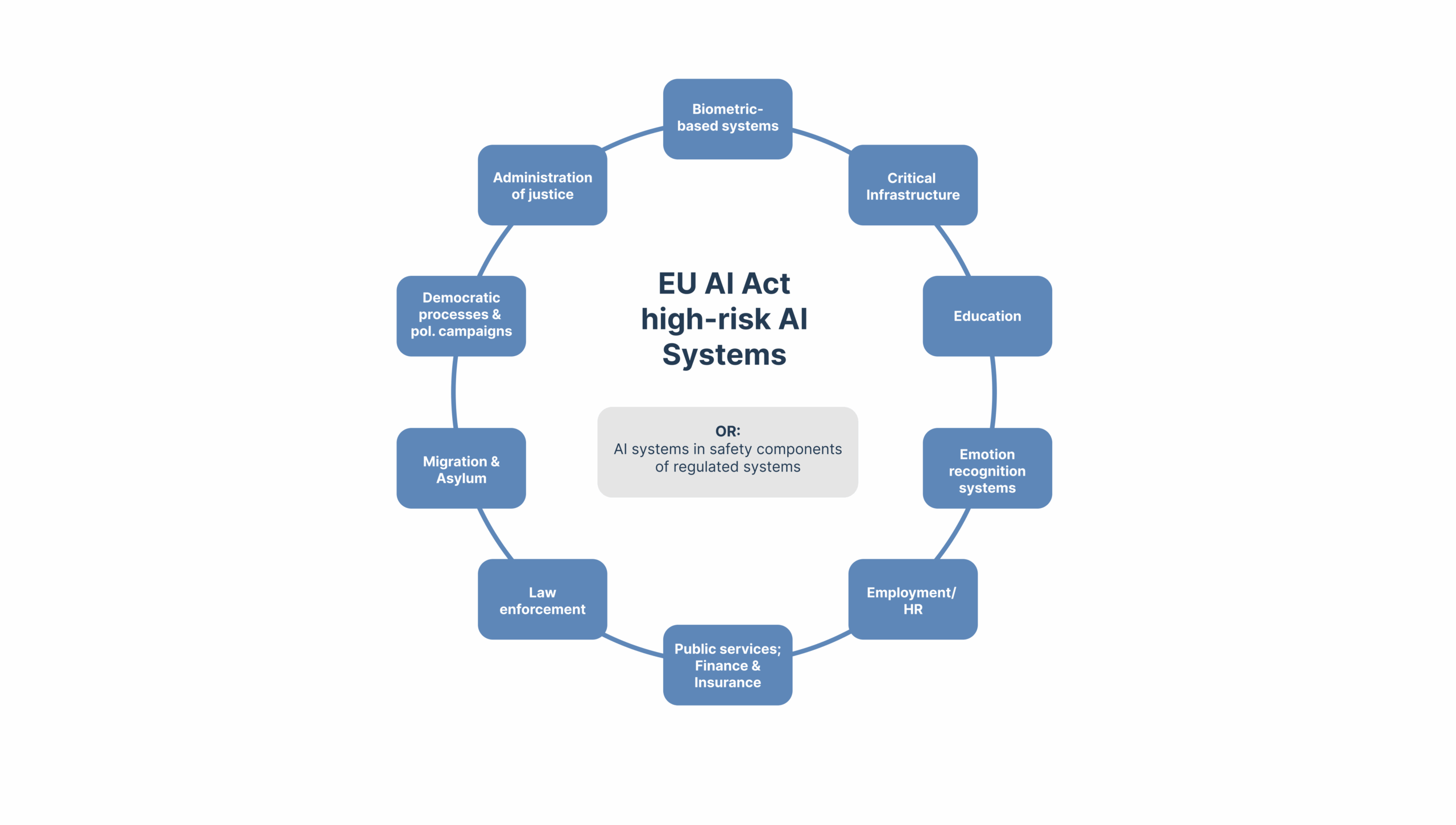

However, the application of biometric categorisation raises profound legal and ethical concerns. Classifying individuals by perceived characteristics introduces risks of bias, unjust profiling, and systemic discrimination. The EU Artificial Intelligence Act responds to these concerns by classifying such systems as high-risk or even prohibited under certain circumstances.

2. Purpose and Role in the EU AI Ecosystem

The EU AI Act treats biometric categorisation as a sensitive AI function because:

- It affects human dignity, autonomy, and privacy

- It may entrench racial or gender-based disparities

- It can enable mass surveillance and social manipulation

- It often operates with low accuracy and weak scientific validity

The goal is not to outlaw all biometric categorisation, but to severely restrict its use, demand rigorous oversight, and ensure proportionality in deployment.

3. Key Contributions and Risks

Biometric categorisation systems pose several critical risks:

- Discriminatory outcomes due to biased training data

- Misclassification leading to exclusion or harm

- Opacity – people are often unaware that they are being classified

- Function creep – technology is repurposed beyond its original scope

- Fundamental rights infringement, especially under the Charter of Fundamental Rights of the EU

At their worst, these systems can reproduce social inequalities through code.

4. Connection to the EU AI Act and the EU AI Safety Alliance

The EU AI Act regulates biometric categorisation through:

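- Article 5(1)(g) – Prohibits biometric categorisation systems that categorise individuals on the basis of their biometric data to deduce or infer race, political opinions, trade union membership, religious or philosophical beliefs, sex life, or sexual orientation; the prohibition is not limited to public spaces, and carries a narrow exception for labelling or filtering lawfully acquired biometric datasets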

- Annex III, point 1(b) – Classifies biometric categorisation according to sensitive or protected attributes as high-risk where it is not already prohibited; employment, education, and law enforcement are listed separately as high-risk areas

- Articles 10 and 13 – Impose data governance and transparency obligations on high-risk systems, including those that use biometric data

- Article 72 – Requires providers to carry out post-market monitoring, which covers performance and discriminatory impacts

The EU AI Safety Alliance assists providers and deployers by:

- Conducting bias and fairness assessments

- Offering technical and legal checklists for sensitive categorisation functions

- Supporting data representativeness reviews

- Advising on transparency notices and user rights protections

Using these tools helps ensure systems meet legal thresholds and ethical expectations.
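A data representativeness review of the kind listed above could start from a simple comparison between the group shares in a training set and the shares expected in the population the system will serve. The sketch below is only an illustration: the function names, the `tolerance` value, and the idea of a single reference distribution are assumptions, not requirements taken from the Act or from the Alliance.

```python
from collections import Counter


def representation_gaps(groups, reference_shares):
    """Observed share minus reference share for every group.

    groups: one group label per training record (hypothetical field).
    reference_shares: dict of group label -> expected share (assumed known).
    """
    groups = list(groups)
    if not groups:
        raise ValueError("dataset is empty")
    counts = Counter(groups)
    total = len(groups)
    # Include groups that appear in only one of the two sources.
    labels = set(counts) | set(reference_shares)
    return {
        label: counts.get(label, 0) / total - reference_shares.get(label, 0.0)
        for label in labels
    }


def under_represented(gaps, tolerance=0.05):
    """Groups whose observed share falls short by more than `tolerance`.

    The 0.05 default is an arbitrary illustrative threshold.
    """
    return sorted(label for label, gap in gaps.items() if gap < -tolerance)


if __name__ == "__main__":
    sample = ["a"] * 80 + ["b"] * 15 + ["c"] * 5
    reference = {"a": 0.5, "b": 0.3, "c": 0.2}
    print(under_represented(representation_gaps(sample, reference)))
```

A check like this only shows whether groups are present in plausible proportions; it says nothing about label quality or about how the model performs for each group.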

5. Stakeholder Responsibilities

Effective governance of biometric categorisation involves:

- Developers – Ensuring AI systems do not encode or reinforce discriminatory structures

- Deployers – Justifying necessity and proportionality of use, especially in public or regulated domains

- Data protection officers (DPOs) – Reviewing GDPR compliance and special category data usage

- Legal teams – Evaluating risk under the EU AI Act and Charter rights

- Human rights experts and civil society – Monitoring use and advocating for safeguards

No single actor can ensure ethical biometric classification; it requires vigilance across all of these roles.

6. Legal and Technical Conditions for Use

To lawfully use biometric categorisation systems under the EU AI Act:

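- The system must not infer sensitive attributes such as race, political opinions, religious beliefs, or sexual orientation from biometric data; such use is prohibited in most cases, and not only in public spaces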

- It must undergo risk classification and, if high-risk, pass a conformity assessment

- Training data must be diverse, representative, and subject to quality checks

- The system must be transparent: people exposed to it should be told when and how classification occurs

- Providers must implement bias monitoring and mitigation controls

- Human oversight must be guaranteed

- Technical documentation (Annex IV) must include justification for system design and risk controls

Failing to meet these criteria may result in market withdrawal, fines, or reputational damage.
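The first two conditions above amount to a screening step that can be written down before any legal review. The sketch below is a rough triage aid, not legal advice: the attribute sets are illustrative and incomplete, the names `Triage` and `triage` are hypothetical, and the sketch conservatively flags inference of the listed attributes wherever the system runs.

```python
from enum import Enum


class Triage(Enum):
    POTENTIALLY_PROHIBITED = "potentially prohibited: obtain legal review before any use"
    LIKELY_HIGH_RISK = "likely high-risk: expect conformity assessment and documentation"
    NEEDS_REVIEW = "not flagged here: transparency and GDPR review still apply"


# Assumed from the wording of Article 5; verify against the current legal text.
# "ethnicity" is grouped with "race" as a conservative assumption of this sketch.
FLAG_PROHIBITED = {
    "race", "ethnicity", "political opinion", "trade union membership",
    "religion", "philosophical belief", "sex life", "sexual orientation",
}

# Illustrative examples of other sensitive or protected attributes.
FLAG_SENSITIVE = {"age", "gender"}


def triage(inferred_attributes):
    """Return a coarse triage result for the attributes a system infers."""
    attrs = {a.strip().lower() for a in inferred_attributes}
    if attrs & FLAG_PROHIBITED:
        return Triage.POTENTIALLY_PROHIBITED
    if attrs & FLAG_SENSITIVE:
        return Triage.LIKELY_HIGH_RISK
    return Triage.NEEDS_REVIEW


if __name__ == "__main__":
    for case in (["age"], ["age", "religion"], ["hair colour"]):
        print(case, "->", triage(case).value)
```

The output is only a prompt for the right conversation: exceptions, intended purpose, and the role of the organisation all affect the actual classification.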

7. How to Approach Biometric Categorisation Responsibly

Organisations that wish to use biometric categorisation must:

- Assess legality and necessity of classifying biometric features

- Avoid any classification linked to prohibited grounds, whether in public spaces or elsewhere

- Conduct a fundamental rights impact assessment

- Engage with the EU AI Safety Alliance for compliance guidance

- Use auditable datasets and models, with performance disaggregated by demographic groups

- Offer user recourse, including opt-outs and rights to explanation

- Monitor system impacts continuously and transparently
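Reporting performance disaggregated by demographic group, as recommended above, can be sketched in a few lines. The example assumes a binary classifier with labels 0 and 1 and a group label per record; the function names and the choice of accuracy and false positive rate as metrics are illustrative assumptions, and a real audit would likely use more metrics and confidence intervals.

```python
from collections import defaultdict


def disaggregated_metrics(y_true, y_pred, groups):
    """Per-group sample size, accuracy, and false positive rate."""
    if not (len(y_true) == len(y_pred) == len(groups)):
        raise ValueError("inputs must have the same length")
    stats = defaultdict(lambda: {"n": 0, "correct": 0, "fp": 0, "neg": 0})
    for truth, pred, group in zip(y_true, y_pred, groups):
        s = stats[group]
        s["n"] += 1
        s["correct"] += int(truth == pred)
        if truth == 0:
            s["neg"] += 1
            s["fp"] += int(pred == 1)
    return {
        group: {
            "n": s["n"],
            "accuracy": s["correct"] / s["n"],
            # Undefined when a group has no negative examples.
            "false_positive_rate": s["fp"] / s["neg"] if s["neg"] else None,
        }
        for group, s in stats.items()
    }


def accuracy_gap(report):
    """Difference between the best and worst per-group accuracy."""
    accuracies = [m["accuracy"] for m in report.values()]
    return max(accuracies) - min(accuracies)


if __name__ == "__main__":
    y_true = [1, 0, 1, 0, 1, 0, 1, 0]
    y_pred = [1, 0, 1, 0, 0, 1, 1, 0]
    groups = ["A"] * 4 + ["B"] * 4
    report = disaggregated_metrics(y_true, y_pred, groups)
    print(report, accuracy_gap(report))
```

Keeping the per-group sample size in the report matters: a large gap measured on a handful of records is weak evidence, while a small gap on a very small group may hide a real problem.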

The priority is fairness, safety, and social legitimacy, not efficiency or novelty.