EU AI Act

Stay ahead—prepare for the EU AI Act now

EU AI Act summary

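In March 2024, the European Parliament adopted the EU AI Act, the world’s most comprehensive AI legislation. The Act entered into force on 1 August 2024, and its obligations apply in stages: the bans on prohibited practices from February 2025, the rules for general-purpose AI models from August 2025, and most remaining requirements from August 2026. The Act aims to address the negative impacts of rapidly deployed high-risk AI systems while fostering innovation.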

This groundbreaking regulation is poised to become a global benchmark, offering a responsible AI framework that mitigates issues such as bias, data leakage, and risks posed by both standalone and composite AI systems, including predictive AI and generative AI models like ChatGPT.

The regulation balances safety and innovation, positioning Europe as a leader in responsible AI governance.

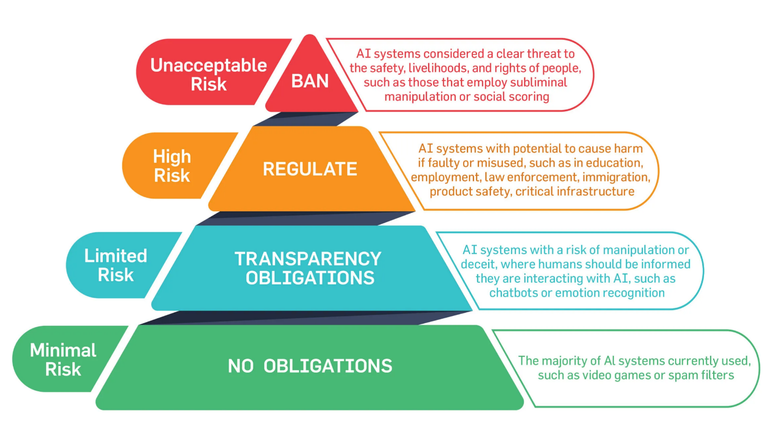

Risk classifications according to the EU AI Act

The EU AI Act sorts AI systems into four risk levels. The highest level, unacceptable risk, covers practices that are prohibited outright. The second level, high risk, is the most heavily regulated category still allowed on the EU market. This classification includes safety components of already regulated products and stand-alone AI systems in specific areas (outlined below) that could adversely impact health, safety, fundamental rights, or the environment. These systems pose a significant risk of causing harm if they fail or are misused. A detailed explanation of what constitutes a high-risk AI system will follow in the next section.

The third level of risk is limited risk, which applies to AI systems with potential for manipulation or deception. Systems in this category must meet transparency requirements, ensuring that users are informed that they are interacting with AI (unless this is self-evident). Additionally, deepfakes must be clearly labeled as such. For example, chatbots are classified as limited-risk systems, a designation particularly relevant for generative AI and its outputs.

The lowest risk level defined by the EU AI Act is minimal risk. This category covers AI systems that fall outside the classifications above, such as spam filters. Minimal-risk systems are free from restrictions or mandatory obligations, though adhering to general principles like human oversight, non-discrimination, and fairness is recommended.
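For teams that track AI use cases in an internal inventory, the risk levels can be captured as a simple lookup table. The following is a minimal sketch in Python, not a legal classification tool: the names `RiskTier`, `OBLIGATIONS`, and `EXAMPLE_TIERS` are hypothetical, the obligation texts are shortened paraphrases of this page, and the two example systems are the ones named above.

```python
from enum import Enum


class RiskTier(Enum):
    """The four risk levels of the EU AI Act, highest first (hypothetical names)."""
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Shortened paraphrases of the obligations described in this section.
# Illustrative only; the Act's own text is authoritative.
OBLIGATIONS = {
    RiskTier.UNACCEPTABLE: "Prohibited practice; not allowed on the EU market.",
    RiskTier.HIGH: "Most heavily regulated category still allowed on the EU market.",
    RiskTier.LIMITED: "Transparency duties: tell users they interact with AI, label deepfakes.",
    RiskTier.MINIMAL: "No mandatory obligations; general principles are recommended.",
}

# The two example systems this section names explicitly.
EXAMPLE_TIERS = {
    "chatbot": RiskTier.LIMITED,
    "spam filter": RiskTier.MINIMAL,
}


def obligations_for(system_name: str) -> str:
    """Return the obligation summary for one of the example systems."""
    return OBLIGATIONS[EXAMPLE_TIERS[system_name]]


if __name__ == "__main__":
    for name in EXAMPLE_TIERS:
        print(f"{name}: {obligations_for(name)}")
```

A real inventory would assign tiers only after a proper assessment of each use case; the table here simply mirrors the examples given on this page.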

Do I need to comply with the EU AI Act?

If your company develops, markets, or uses AI systems in the EU, or if your AI system’s outputs are used within the EU, you must comply with the EU AI Act. The Act applies to anyone providing, deploying, or placing AI systems on the EU market. It is crucial to identify your role and responsibilities, especially if you work with high-risk AI systems, and ensure compliance with the Act’s requirements.

To simplify this process, we have developed a Self-Assessment Tool. This tool helps you identify the risk level of your AI use case and understand the obligations you may need to meet under the AI Act.

Penalties / impacts of non-compliance

Non-compliance with the EU AI Act can lead to substantial penalties:

Severe Infringements: Engaging in prohibited AI practices may result in fines up to €35 million or 7% of the company’s total worldwide annual turnover for the preceding financial year, whichever is higher.

Other Violations: Breaches of obligations under the Act can incur fines up to €15 million or 3% of the total worldwide annual turnover, whichever is higher.

Providing False Information: Supplying incorrect, incomplete, or misleading information to notified bodies or national competent authorities can lead to fines up to €7.5 million or 1% of the total worldwide annual turnover, whichever is higher.

These penalties underscore the importance of adhering to the EU AI Act’s requirements to avoid significant financial repercussions.
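Because each ceiling is the higher of a fixed amount and a share of turnover, the maximum exposure depends on company size. The sketch below works through that arithmetic using the three ceilings quoted above. The function name `max_fine_eur` and the tier keys are hypothetical labels chosen for illustration, and the result is an upper bound, not a prediction of the fine an authority would actually impose.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PenaltyTier:
    fixed_cap_eur: int      # fixed ceiling in euros
    turnover_percent: int   # percent of total worldwide annual turnover


# Ceilings as quoted in this section; the keys are hypothetical labels.
PENALTY_TIERS = {
    "prohibited_practice": PenaltyTier(35_000_000, 7),
    "other_violation": PenaltyTier(15_000_000, 3),
    "false_information": PenaltyTier(7_500_000, 1),
}


def max_fine_eur(tier: str, annual_turnover_eur: int) -> float:
    """Return the fine ceiling: the higher of the fixed cap and the turnover share."""
    t = PENALTY_TIERS[tier]
    turnover_based = annual_turnover_eur * t.turnover_percent / 100
    return max(t.fixed_cap_eur, turnover_based)


if __name__ == "__main__":
    # Two illustrative turnovers: EUR 100 million and EUR 1 billion.
    for turnover in (100_000_000, 1_000_000_000):
        for tier in PENALTY_TIERS:
            print(f"turnover {turnover:>13,} | {tier:<20} | {max_fine_eur(tier, turnover):>13,.0f}")
```

For a company with €1 billion in worldwide annual turnover, the ceiling for a prohibited practice is €70 million, since 7% of turnover exceeds the €35 million fixed amount. For a company with €100 million in turnover, 7% is only €7 million, so the €35 million fixed amount sets the ceiling.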